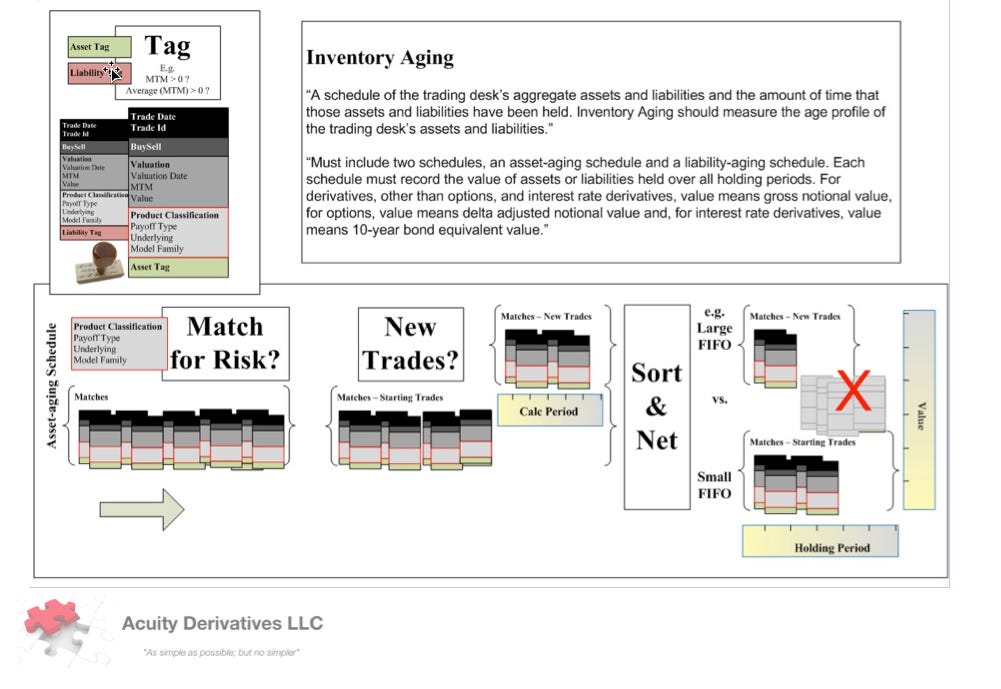

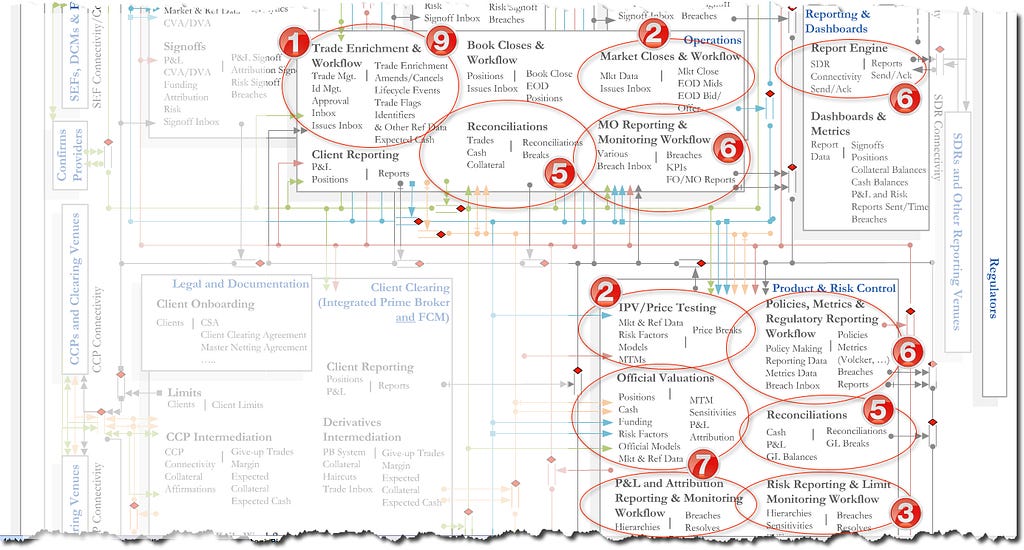

Product (Risk, Model, and Data) Classifications… an analogical t...

Classifying whisky is complex. There are many dimensions along which to group or cluster the varieties, e.g. by ingredient composition (peat, malted barley, other grain, yeast, local water, local ...

Sep 24, 2018